Server-side analytics in .NET Core

Google Analytics is a good tool for analyzing your website audience, but it relies on client-side code (JavaScript), which is not always reliable: you don't control what happens in the browser, and users can simply disable GA scripts (web browsers have even started blocking such tracking out of the box).

A more reliable way to analyze your visitors is server-side analytics.

Why use server-side analytics

I mean, come on, you own the server, so your analytics numbers will be much more precise. It's inevitable: every request goes through your server, so you can count and analyze every single one of them, not just those where a GA script managed to run. On top of that, you won't depend on a third-party service, so you won't share your (and your visitors') data with it.

Speaking of numbers being more precise: right now GA tells me there were 3 visitors on my website today, whereas according to my own analytics I had 34 visitors excluding bots and 45 in total.

There are downsides though.

The first one is the increased workload on your server. The more visitors (requests per second) you have, the more loaded your server will be (mostly the database). If you have quite a large daily audience, you might simply run out of hardware resources to process it all. But then again, if you do have a large daily audience, I guess you can afford to spend some money on your infrastructure.
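One way to soften that load (an approach I'm assuming here, not something the middleware in this article does) is to keep database writes out of the request path: the middleware only drops each hit into an in-memory buffer, and a background worker writes hits to the database in batches. Below is a minimal sketch of such a buffer, assuming System.Threading.Channels (built into .NET Core 3.0 and later, a NuGet package before that). HitBuffer is a hypothetical name, and the background worker that would call ReadBatchAsync() and run a multi-row INSERT is left out.

```csharp
using System.Collections.Generic;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical in-memory buffer between the analytics middleware and the database
public class HitBuffer<T>
{
    private readonly Channel<T> _channel;

    public HitBuffer(int capacity)
    {
        // bounded, so a traffic spike cannot eat all the memory;
        // in Wait mode TryWrite() simply returns false when the buffer is full
        _channel = Channel.CreateBounded<T>(new BoundedChannelOptions(capacity)
        {
            FullMode = BoundedChannelFullMode.Wait
        });
    }

    // Called from the middleware: never blocks the request.
    // Returns false (and the hit is lost) if the buffer is full.
    public bool TryAdd(T item) => _channel.Writer.TryWrite(item);

    // Called from a background worker: waits for at least one item,
    // then takes up to maxBatch items that are already buffered.
    public async Task<List<T>> ReadBatchAsync(int maxBatch)
    {
        var batch = new List<T>();
        if (!await _channel.Reader.WaitToReadAsync())
        {
            return batch; // the buffer was completed and is empty
        }
        while (batch.Count < maxBatch && _channel.Reader.TryRead(out T item))
        {
            batch.Add(item);
        }
        return batch;
    }

    // Signals that no more hits will be added (e.g. on application shutdown)
    public void Complete() => _channel.Writer.Complete();
}
```

Dropping hits when the buffer is full is arguably a better trade-off for analytics than making visitors wait for the database.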

Another disadvantage of your own analytics compared with GA scripts is that you'll have to do lots of things yourself: for example, separating real human visitors from web crawlers/robots, or determining the geography of your visitors based on their IP addresses (by the way, several users can share the same IP address). But we're not so easily scared by difficulties, are we?
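To give an idea of what "doing it yourself" means, here is a sketch of counting unique visitors per day from stored requests. The definition of a visitor used here (a distinct pair of IP address and User-Agent, which at least separates people sharing one IP but using different browsers) is my assumption for illustration, as are the Hit and VisitorStats names; the bot check is the same rough User-Agent heuristic described later in this article.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One stored request (a few columns of a hypothetical analytics table)
public class Hit
{
    public DateTime Dt { get; set; }
    public string Ip { get; set; }
    public string UserAgent { get; set; }
}

public static class VisitorStats
{
    // Approximates unique visitors for a given day as distinct (IP, User-Agent) pairs
    public static int UniqueVisitors(IEnumerable<Hit> hits, DateTime day, bool excludeBots)
    {
        return hits
            .Where(h => h.Dt.Date == day.Date)
            .Where(h => !excludeBots || !LooksLikeBot(h.UserAgent))
            .Select(h => (h.Ip, h.UserAgent ?? string.Empty))
            .Distinct()
            .Count();
    }

    // rough heuristic: crawlers often have "bot/" or "/bot" in their User-Agent
    private static bool LooksLikeBot(string userAgent) =>
        userAgent != null
        && (userAgent.IndexOf("bot/", StringComparison.OrdinalIgnoreCase) >= 0
            || userAgent.IndexOf("/bot", StringComparison.OrdinalIgnoreCase) >= 0);
}
```

Calling it once with excludeBots: true and once with excludeBots: false gives the "without bots" and "total" numbers for a day.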

So let's see how to analyze your website visitors in a .NET Core MVC project.

Adding analytics in .NET Core

This can be done with a custom middleware.

Custom middleware

If you don't know what a middleware is, Microsoft has good documentation about it.

But I can explain the basics a bit faster. You must remember that scene from The 13th Warrior, where the Vikings sit at the table and pass around a bowl of water in a rather peculiar manner: each one washes in it, then spits and blows his nose into it, so every next Viking in the chain gets a bowl with more and more disgusting contents. In our case, the bowl is a web request, and the Vikings are middlewares.

The request (bowl) goes to the first middleware (Viking), gets processed (spat into), then goes to the next middleware (next Viking), and so on. The last middleware (Viking) in the chain gets an absolutely horrible bowl full of nasty things, does his part and sends it back along the same chain (so it travels in reverse order). The others can add something to the bowl (now it's a response) on its way back, but they prefer not to touch it much.
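To make the bowl-passing order concrete, here is a toy model of such a pipeline in plain C#, with no ASP.NET Core involved. Bowl, BowlHandler, ToyPipeline and Viking are hypothetical names made up for this sketch; the real pipeline works with HttpContext and RequestDelegate and does a lot more, but the way middlewares are wrapped around each other is similar.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Stands in for HttpContext: records who touched the bowl and when
public class Bowl
{
    public List<string> Trace { get; } = new List<string>();
}

// Stands in for RequestDelegate
public delegate Task BowlHandler(Bowl bowl);

public static class ToyPipeline
{
    // Wraps the middlewares around the terminal handler from last to first,
    // so the first middleware in the list is the first to see the request
    public static BowlHandler Build(IList<Func<Bowl, BowlHandler, Task>> middlewares, BowlHandler terminal)
    {
        BowlHandler next = terminal;
        for (int i = middlewares.Count - 1; i >= 0; i--)
        {
            Func<Bowl, BowlHandler, Task> current = middlewares[i];
            BowlHandler downstream = next; // capture this step's "next" for the closure
            next = bowl => current(bowl, downstream);
        }
        return next;
    }

    // A middleware that notes when the request passes by and when the response comes back
    public static Func<Bowl, BowlHandler, Task> Viking(string name) =>
        async (bowl, next) =>
        {
            bowl.Trace.Add($"{name}: request");
            await next(bowl);
            bowl.Trace.Add($"{name}: response");
        };
}
```

With two Vikings and a terminal handler, the trace reads "first: request, second: request, last, second: response, first: response". A middleware that never calls next (like UseStaticFiles() when it finds the requested file) stops the bowl from going any further down the chain.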

So what we'll be doing here is adding a new middleware: a new Viking to sit at the table, wash in the bowl and spit into it.

Take a look at your Startup.cs file. You’ll see something like this:

public void Configure(
    IApplicationBuilder app,
    IHostingEnvironment env,
    ILoggerFactory loggerFactory
    )
{
    // ...

    app.UseStaticFiles(); // middleware #1
    app.UseStatusCodePages(); // middleware #2
    app.UseAuthentication(); // middleware #3

    // ...
}

These app.UseSOMETHING() calls are middlewares. Their order matters: the UseStaticFiles() middleware gets the request first, then UseStatusCodePages(), then UseAuthentication(), and so on.

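So where should we put our middleware? At the very beginning of the chain, of course, so that every request gets counted (for instance, when UseStaticFiles() serves a static file, it doesn't pass the request any further, so middlewares after it never see such requests):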

public void Configure(
    IApplicationBuilder app,
    IHostingEnvironment env,
    ILoggerFactory loggerFactory
    )
{
    // ...

    app.UseAnalytics(); // now this is middleware #1
    app.UseStaticFiles(); // middleware #2
    app.UseStatusCodePages(); // middleware #3
    app.UseAuthentication(); // middleware #4

    // ...
}

Now we need to actually implement it. Create the Middleware/AnalyticsMiddleware.cs file in your project:

using System;
using System.Text;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.Logging;
using Microsoft.Net.Http.Headers;

// replace YourProject with your project's namespace
namespace YourProject.Middleware
{
    public class AnalyticsMiddleware
    {
        private readonly RequestDelegate _next;
        private readonly ILogger _logger;
        // used later for storing the data in a database
        private readonly string _connectionString;

        public AnalyticsMiddleware(
            RequestDelegate next,
            ILoggerFactory loggerFactory,
            IConfiguration configuration
            )
        {
            _next = next;
            _logger = loggerFactory.CreateLogger<AnalyticsMiddleware>();
            _connectionString = configuration.GetConnectionString("DefaultConnection");
        }

        public async Task InvokeAsync(HttpContext context)
        {
            try
            {
                StringBuilder info = new StringBuilder($"Got a request{Environment.NewLine}---{Environment.NewLine}");
                info.Append($"- remote IP: {context.Connection.RemoteIpAddress}{Environment.NewLine}");
                info.Append($"- path: {context.Request.Path}{Environment.NewLine}");
                info.Append($"- query string: {context.Request.QueryString}{Environment.NewLine}");
                info.Append($"- [headers] ua: {context.Request.Headers[HeaderNames.UserAgent]}{Environment.NewLine}");
                info.Append($"- [headers] referer: {context.Request.Headers[HeaderNames.Referer]}{Environment.NewLine}");
                _logger.LogWarning(info.ToString());
            }
            catch (Exception ex)
            {
                // we don't care much about exceptions in analytics,
                // the request itself must still be processed
                _logger.LogError(ex, "Some error in analytics middleware");
            }

            // pass the request (bowl) to the next middleware in the chain
            await _next(context);
        }
    }

    public static class AnalyticsMiddlewareExtensions
    {
        // allows writing app.UseAnalytics() in Startup.cs
        public static IApplicationBuilder UseAnalytics(this IApplicationBuilder builder)
        {
            return builder.UseMiddleware<AnalyticsMiddleware>();
        }
    }
}

Now every time your server (website) gets a request, you'll see the following in your log:

WARN [2018-07-27 21:13:19] Got a request
---
- remote IP: 127.0.0.1
- path: /some/path
- query string: ?some=param
- [headers] ua: Mozilla/5.0 (Macintosh; Intel Mac OS X 10.13; rv:62.0) Gecko/20100101 Firefox/62.0
- [headers] referer: http://some-source

But such a wall of log records is rather hard to analyze, so it's better to store this data in a database instead:

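// this goes into the InvokeAsync() method of AnalyticsMiddleware;
// MySqlConnection comes from a MySQL ADO.NET provider (for example, MySql.Data: using MySql.Data.MySqlClient;)
using (MySqlConnection sqlConn = new MySqlConnection(_connectionString))
{
    await sqlConn.OpenAsync();
    using (MySqlCommand cmd = new MySqlCommand(
        "INSERT INTO analytics(dt, ip, path, query, referer, ua) VALUES(@dt, @ip, @path, @query, @referer, @ua);",
        sqlConn
        ))
    {
        cmd.Parameters.AddWithValue("@dt", DateTime.Now);
        // convert ASP.NET Core types (IPAddress, PathString, QueryString, StringValues)
        // to plain strings, so the provider knows how to store them
        cmd.Parameters.AddWithValue("@ip", context.Connection.RemoteIpAddress?.ToString() ?? string.Empty);
        cmd.Parameters.AddWithValue("@path", context.Request.Path.Value ?? string.Empty);
        cmd.Parameters.AddWithValue("@query", (object)context.Request.QueryString.Value ?? DBNull.Value);
        cmd.Parameters.AddWithValue("@referer", context.Request.Headers[HeaderNames.Referer].ToString());
        cmd.Parameters.AddWithValue("@ua", context.Request.Headers[HeaderNames.UserAgent].ToString());
        await cmd.ExecuteNonQueryAsync();
    }
}

And here's the table for that: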

CREATE TABLE `analytics` (
    `id` bigint(11) unsigned NOT NULL AUTO_INCREMENT,
    `dt` datetime NOT NULL,
    `ip` varchar(45) NOT NULL DEFAULT '',
    `path` varchar(250) NOT NULL DEFAULT '',
    `query` varchar(500) DEFAULT NULL,
    `referer` varchar(500) DEFAULT NULL,
    `ua` varchar(250) NOT NULL,
    PRIMARY KEY (`id`)
);

Oh, by the way, since you're now collecting and storing your users' data directly, it would be a good idea to describe that in your /privacy.txt.
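One thing to watch out for with this table: ua and path are limited to 250 characters, query and referer to 500, and in MySQL's strict SQL mode (enabled by default since MySQL 5.7) an INSERT with a longer value fails with a "Data too long" error, while some User-Agent strings are pretty long. Here is a minimal sketch of trimming values before passing them as parameters; AnalyticsValues.Fit is a hypothetical helper, and it assumes utf8mb4 columns, where MySQL counts characters (code points).

```csharp
using System;

public static class AnalyticsValues
{
    // Trims a value so it fits into a VARCHAR(maxLength) column.
    // C# string length counts UTF-16 code units, which is never less than
    // the number of code points MySQL counts, so the result always fits.
    public static string Fit(string value, int maxLength)
    {
        if (maxLength <= 0 || value == null)
        {
            return string.Empty;
        }
        if (value.Length <= maxLength)
        {
            return value;
        }
        int cut = maxLength;
        // do not split a surrogate pair (e.g. an emoji) in half
        if (char.IsHighSurrogate(value[cut - 1]))
        {
            cut--;
        }
        return value.Substring(0, cut);
    }
}
```

In the middleware, that would look like cmd.Parameters.AddWithValue("@ua", AnalyticsValues.Fit(userAgent, 250)). Alternatively, you could simply make the columns wider.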

How to deal with a reverse proxy

If your Kestrel is running behind a reverse proxy (which is recommended), then by default all the IP addresses it sees are 127.0.0.1, because every request comes from the proxy itself. To forward real IP addresses from the reverse proxy to your website, you need to:

- Configure the proxy (NGINX in my case);

- Configure the .NET Core application (your website).

Here’s a manual from Microsoft.

Start with NGINX (nano /etc/nginx/sites-available/WEBSITE):

location / {
    proxy_pass http://localhost:5000;
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection keep-alive;
    proxy_set_header Host $host;
    proxy_cache_bypass $http_upgrade;

    # forward real IP address
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
}

And then add the UseForwardedHeaders() middleware (yes, yet another one, but this time a standard one) to Startup.cs, before your UseAnalytics() middleware:

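// ...

// get real IP from reverse proxy
// (ForwardedHeadersOptions and ForwardedHeaders are in the Microsoft.AspNetCore.HttpOverrides namespace)
app.UseForwardedHeaders(new ForwardedHeadersOptions
{
    ForwardedHeaders = ForwardedHeaders.XForwardedFor | ForwardedHeaders.XForwardedProto
});

// our custom analytics middleware
app.UseAnalytics();

// ...

Now you will be getting real IP addresses forwarded from NGINX. Note that by default this middleware only trusts forwarded headers coming from the loopback address, which is fine when NGINX runs on the same machine; if the proxy is on another host, you need to add it to the KnownProxies (or KnownNetworks) option.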
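Why is it safe to trust that header at all? NGINX's $proxy_add_x_forwarded_for takes whatever X-Forwarded-For value the client sent and appends the address NGINX actually saw, so only the last (rightmost) entry is written by your own proxy, while earlier ones can be forged by anyone. UseForwardedHeaders() with its default ForwardLimit of 1 likewise only takes that last entry into account. Here is a minimal sketch of the idea; ForwardedFor.LastHop is a hypothetical helper for illustration, not how the framework middleware is implemented.

```csharp
using System;
using System.Net;

public static class ForwardedFor
{
    // Returns the rightmost address from an X-Forwarded-For value,
    // i.e. the one appended by our own reverse proxy, or null if there is none
    public static IPAddress LastHop(string headerValue)
    {
        if (string.IsNullOrWhiteSpace(headerValue))
        {
            return null;
        }
        string[] parts = headerValue.Split(',');
        string last = parts[parts.Length - 1].Trim();
        return IPAddress.TryParse(last, out IPAddress address) ? address : null;
    }
}
```

For a header like "1.2.3.4, 203.0.113.7", where the client forged 1.2.3.4 and NGINX appended 203.0.113.7, the result is 203.0.113.7.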

Analyzing collected data

Soon enough you'll discover that quite a portion of requests:

- are for not-that-interesting resources like /favicon.ico, /css/*, /fonts/*, etc.;
- are sent by search crawlers and other robots.

That pollutes your analytics data a bit, but you can improve the situation.

The first type of requests (uninteresting resources) can be filtered out with the following regular expression check:

// Regex is in the System.Text.RegularExpressions namespace
if (!Regex.IsMatch(
    context.Request.Path.Value ?? string.Empty,
    // dots are escaped, otherwise "." would match any character
    @"(^\/css\/.*)|(^\/fonts\/.*)|(^\/images\/.*)|(^\/js\/.*)|(^\/lib\/.*)|(^\/favicon(-.*|\.ico$))|(^\/robots\.txt$)|(^\/rss\.xml$)",
    RegexOptions.IgnoreCase
    ))
{
    // only requests for "good" resources get processed here
}

The second type of requests (robots) cannot be filtered out reliably. The only thing you can do here is check the User-Agent header, but that is not reliable either: you cannot know all the possible values crawlers use, and on top of that users can change the User-Agent value in their browsers. So you can only roughly assume that if this value contains a /bot or bot/ substring, then it is likely a bot:

if (Regex.IsMatch(

context.Request.Headers[HeaderNames.UserAgent],

@"(\/bot)|(bot\/)",

RegexOptions.IgnoreCase

))

{

// do something with it, or actually simply don't analyze such requests

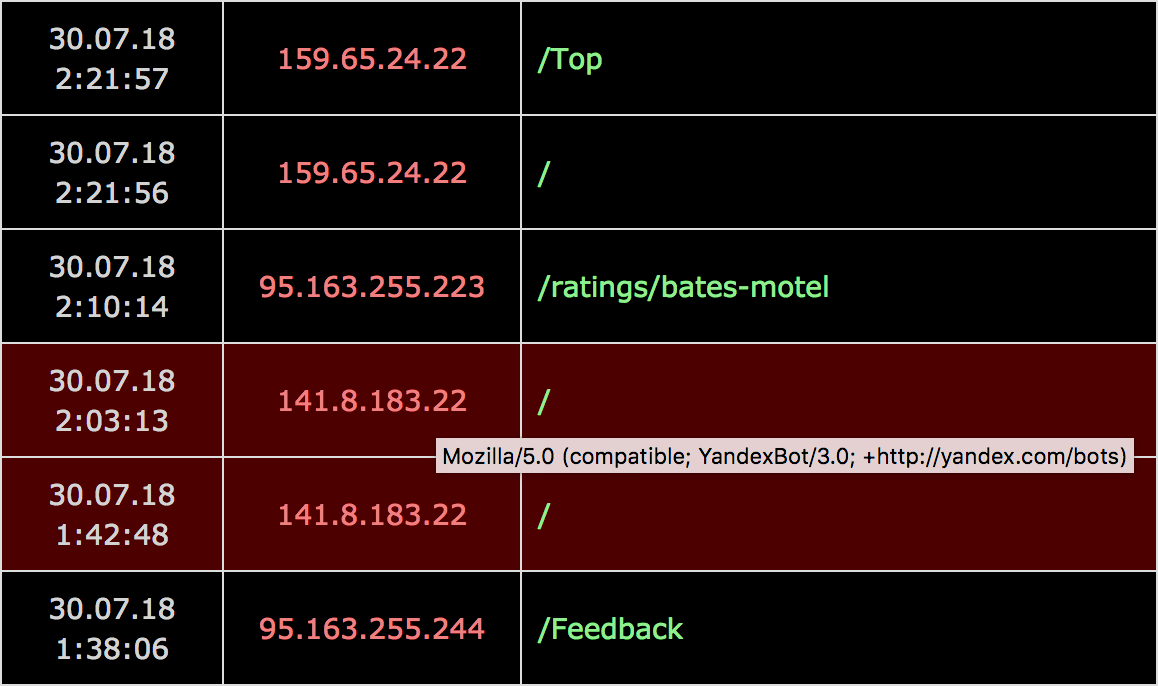

}I decided to save and analyze those anyway. For instance, in my view for analytics I just mark such requests with a different color:

By the way, judging by the statistics so far, Yandex bots/crawlers request my website several fucking times per hour. Google bots are much more modest in that regard.
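Both checks can also be pulled out of the middleware into a small static helper, which makes them easy to test in isolation and lets the regular expressions be compiled once. Here is a sketch with a hypothetical RequestFilters class, using the same two patterns as above (with the dots in favicon.ico, robots.txt and rss.xml escaped, and without the unnecessary slash escapes).

```csharp
using System.Text.RegularExpressions;

public static class RequestFilters
{
    // static resources and service files that are not worth analyzing
    private static readonly Regex UninterestingPath = new Regex(
        @"(^/css/.*)|(^/fonts/.*)|(^/images/.*)|(^/js/.*)|(^/lib/.*)|(^/favicon(-.*|\.ico$))|(^/robots\.txt$)|(^/rss\.xml$)",
        RegexOptions.IgnoreCase | RegexOptions.Compiled
        );

    // rough heuristic: crawlers often put "/bot" or "bot/" into their User-Agent
    private static readonly Regex BotUserAgent = new Regex(
        @"(/bot)|(bot/)",
        RegexOptions.IgnoreCase | RegexOptions.Compiled
        );

    public static bool IsUninterestingPath(string path) =>
        !string.IsNullOrEmpty(path) && UninterestingPath.IsMatch(path);

    public static bool LooksLikeBot(string userAgent) =>
        !string.IsNullOrEmpty(userAgent) && BotUserAgent.IsMatch(userAgent);
}
```

In the middleware, these would be called as RequestFilters.IsUninterestingPath(context.Request.Path.Value) and RequestFilters.LooksLikeBot(context.Request.Headers[HeaderNames.UserAgent].ToString()).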

Bonus part

Some interesting requests

I added server-side analytics to my website just a couple of days ago, but I can already see some interesting requests in the collected data:

/a2billing/admin/Public/PP_error.php?c=accessdenied
/wp-includes/js/wpdialog.js
//xmlrpc.php?rsd
//wp-includes/wlwmanifest.xml
//blog/wp-includes/wlwmanifest.xml
//wordpress/wp-includes/wlwmanifest.xml
//wp/wp-includes/wlwmanifest.xml
//site/wp-includes/wlwmanifest.xml
//cms/wp-includes/wlwmanifest.xml
/index.php?c=58
/viewtopic.php?t=9377&dl=names&spmode=full
/viewtopic.php?p=17478
/viewtopic.php?p=13965
/viewtopic.php?t=20277
/manager/index.php
/viewforum.php?f=927
/viewforum.php?f=915
/viewforum.php?f=1132
//connectors/system/phpthumb.php
//libs/js/iframe.js
//dbs.php
/.idea/WebServers.xml
/.ssh/id_dsa
/.ssh/id_ecdsa
/.ssh/id_ed25519
/id_dsa
/.ssh/id_rsa
/winscp.ini
/WinSCP.ini
/id_rsa
/filezilla.xml
/FileZilla.xml
/sitemanager.xml
/WS_FTP.ini
/ws_ftp.ini
/WS_FTP.INI
/.vscode/sftp.json
/deployment-config.json
/ftpsync.settings
/.ftpconfig
/.remote-sync.json
/.vscode/ftp-sync.json
/lfm.php
/.env
/sftp-config.json

Silly twats just assume there is WordPress or some other PHP-based CMS (what else, right?) behind the website and try to open commonly known pages (with random IDs?). So far I haven't seen /wp-admin among them, which is surprising, but I guess it's just a matter of time before I get it.
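Such requests are easy to flag in your analytics view, just like bots. Here is a deliberately crude sketch; ProbeDetector is a hypothetical name, and the patterns are simply taken from the list above (PHP pages, WordPress files, private keys, FTP/SFTP client configs, IDE folders, .env), so they will miss other scanners and may occasionally flag a legitimate path that happens to contain "ftp".

```csharp
using System.Text.RegularExpressions;

public static class ProbeDetector
{
    // patterns derived from the scanner requests seen so far (path only, without the query string)
    private static readonly Regex Probe = new Regex(
        @"(\.php$)|(/wp-)|(wlwmanifest\.xml$)|(/\.ssh/)|(/id_(rsa|dsa|ecdsa|ed25519)$)|(/\.env$)|(/\.(idea|vscode)/)|(ftp)|(winscp\.ini$)|(filezilla\.xml$)|(sitemanager\.xml$)",
        RegexOptions.IgnoreCase | RegexOptions.Compiled
        );

    public static bool LooksLikeProbe(string path) =>
        !string.IsNullOrEmpty(path) && Probe.IsMatch(path);
}
```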

Headers override

By the way, since we've touched on the subject of middlewares: you can also override some response headers to make the life of silly twats even more miserable by adding the following middleware:

// rewrite some headers for more security
app.Use(async (context, next) =>
{
    context.Response.OnStarting(() =>
    {
        // if Antiforgery hasn't already set this header
        if (string.IsNullOrEmpty(context.Response.Headers["X-Frame-Options"]))
        {
            // do not allow putting your website pages into frames (prevents clickjacking)
            context.Response.Headers["X-Frame-Options"] = "DENY";
        }

        // do not let browsers guess MIME types (prevents MIME-sniffing attacks);
        // the indexer overwrites an existing value, whereas Headers.Add() throws on duplicates
        context.Response.Headers["X-Content-Type-Options"] = "nosniff";

        // hide server information
        context.Response.Headers["Server"] = "ololo";

        // allow loading scripts only from listed sources
        //context.Response.Headers["Content-Security-Policy"] = "default-src 'self' *.google-analytics.com; style-src 'self' 'unsafe-inline'; script-src 'self' 'unsafe-inline'";

        return Task.CompletedTask;
    });

    await next();
});

Note, however, that if you have a reverse proxy web server in front, it will most likely send its own name in the Server header anyway (so you need to change it there).

Updates

2020-05-20 | GoAccess

A perhaps easier way to analyze your website visitors would be to parse your web server logs with a dedicated tool, such as GoAccess.
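If you go down that road but still want the numbers in your own code, parsing the logs yourself is not that hard either. Here is a minimal sketch, assuming NGINX writes its default "combined" access log format ($remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"); LogEntry and CombinedLogParser are hypothetical names, and lines in a custom log_format will simply not match.

```csharp
using System;
using System.Globalization;
using System.Text.RegularExpressions;

public class LogEntry
{
    public string Ip { get; set; }
    public DateTimeOffset Time { get; set; }
    public string Method { get; set; }
    public string Path { get; set; }
    public int Status { get; set; }
    public string Referer { get; set; }
    public string UserAgent { get; set; }
}

public static class CombinedLogParser
{
    private static readonly Regex Line = new Regex(
        @"^(?<ip>\S+) \S+ \S+ \[(?<time>[^\]]+)\] ""(?<method>\S+) (?<path>\S+)[^""]*"" (?<status>\d{3}) \S+ ""(?<referer>[^""]*)"" ""(?<ua>[^""]*)""",
        RegexOptions.Compiled
        );

    // Returns null for lines that are not in the combined format
    public static LogEntry Parse(string line)
    {
        Match m = Line.Match(line ?? string.Empty);
        if (!m.Success)
        {
            return null;
        }

        // $time_local looks like 27/Jul/2018:21:13:19 +0300;
        // insert a colon into the offset (+03:00) so that "zzz" can parse it
        string time = m.Groups["time"].Value;
        if (time.Length < 5 || !DateTimeOffset.TryParseExact(
            time.Insert(time.Length - 2, ":"),
            "dd'/'MMM'/'yyyy':'HH':'mm':'ss zzz",
            CultureInfo.InvariantCulture,
            DateTimeStyles.None,
            out DateTimeOffset parsedTime))
        {
            return null;
        }

        return new LogEntry
        {
            Ip = m.Groups["ip"].Value,
            Time = parsedTime,
            Method = m.Groups["method"].Value,
            Path = m.Groups["path"].Value, // includes the query string
            Status = int.Parse(m.Groups["status"].Value, CultureInfo.InvariantCulture),
            Referer = m.Groups["referer"].Value,
            UserAgent = m.Groups["ua"].Value
        };
    }
}
```

The resulting entries can then go through the same filters as in the middleware (static resources, bots) before being counted.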
